In-depth Linux Guide to Achieve PCI DSS Compliance and Certification

The standard itself is very detailed. Still, it is sometimes unclear what specifically to implement and when. This guide helps with translating the PCI DSS standard into technical security controls on Linux systems.

The goal of this document is to help you further secure your network and pass the PCI DSS audit. It is important to note that this guide is a set of generic tips. Your IT environment might require additional security measures. Always consult your auditor when it comes to interpreting the PCI DSS requirements and how they relate to your systems and network.

This article is based on the current version of PCI DSS, which is now version 3.2.1 (May 2018). Discovered something outdated or incorrect? Let us know!

- Focus area: PCI DSS auditing for Debian, Ubuntu, CentOS, RHEL, and other Linux distributions

- Audience: system administrators, IT auditors and security professionals

PCI DSS requirements for Linux systems

The web is full of information about every compliance standard. Still, there is a lot of confusion about how to interpret things, especially when it comes down to the details. PCI DSS is no exception. Whether you are a system administrator, an IT manager, or a Qualified Security Assessor (QSA), we all have different ideas regarding the details.

These different ideas about implementation may stem from our previous experiences or, the opposite, from lacking specific knowledge of a particular subject. Whatever the reason, it is valuable to have more detailed resources available. Unfortunately, most websites provide only the basics, or they are simply created for marketing purposes. For Linux systems in particular, and how the sections of the PCI DSS standard apply to them, there isn’t much quality content available.

Why we wrote this guide

To help people with their compliance journey, we invested a lot of time in writing this guide. It is being filled with tips, examples, and implementation ideas. We don’t focus just on the system administrator, but also on the IT auditor. This way both parties benefit from the knowledge and can help each other get companies PCI DSS certified using Linux systems.

This guide has been written to support Lynis, one of the best-known open source auditing utilities for Linux/Unix systems. It is the tool written by us to help with performing security audits. While we will recommend many tools in this guide, we will sometimes point out where Lynis in particular can help. Not because we want to promote it more than others, but because we know more about it.

Before we go into the technical details, let’s first get a better picture of PCI DSS. It is good to know why it exists, and where (and how) it relates to Linux systems.

Payment industry

Every system should have a business purpose, or at least support the business. This includes production systems, spare systems, and even test systems. All these systems play an important role in your day-to-day business. If you depend on being PCI DSS compliant, you had better keep your IT environment, including these machines, in a healthy condition. Your business partners, customers, and colleagues depend on it. Payments are about trust for all parties involved. By applying the right security measures, we can ensure a high level of trust, or detect and analyze when one of these measures fails.

The auditor: Friend or Foe?

The role of the auditor has a certain reputation. Some technical people really dislike auditors, as they feel their work is being judged or criticized. Others like the auditor, as they openly discuss subjects with management that were previously ignored.

If you are a system administrator

First lesson: don’t take things personally. The auditor is there to determine how well policy and processes are being executed, combined with the effectiveness of the related technical controls. It is not about you, or how well you do your work. It is better to leverage the knowledge of the particular auditor and see how they can help you achieve more.

If you are an auditor

Respect the environment in which technical people have to work. Politics, management, and limited training budgets have an impact on technical people. They want to achieve their best work with the available resources. Especially regarding Linux, things can be done in many ways. Instead of demanding that some information be available, consider the impact it may have on the day-to-day job of system administrators. Help them to get the right resources and involve their manager. For example, the purchase of an automation or auditing tool can be of great help.

Security automation

Systems are like us. They need proper maintenance to function properly. The PCI DSS standard defines many security safeguards, including specific configuration settings. You should look carefully at how every security measure fits in your own security policy, but also within the technical capabilities and knowledge you have.

Besides the tips we share in this article, we also suggest implementing the right tooling to support you. Making manual changes is fine, automating them is (usually) better. Let’s first cover some guidelines regarding configuration management. This might be of great help in becoming and staying compliant with PCI DSS.

Configuration management

During the life cycle of systems, we tend to change them step by step. This introduces the risk of “configuration drift”, a fancy term for the situation in which a system is changed step by step, to a point where it is unclear which state is actually right. This especially becomes an issue when we have multiple systems that we expect to be configured in a similar way, yet they all differ slightly.

Alignment with security policies

To prevent configuration drift, we need a way to keep systems in a controlled state. On a technical level, we need the right tools to check, adjust and monitor changes. Having the right tools alone won’t cut it though. Equally important is having the right policy available, to define the ‘what’ and the ‘why’.

To get the policy and the technical tools aligned, a baseline is of great help. This baseline, or minimum rule set, defines what is expected from the individual configuration pieces on a system. The next step is to use tools to check system configurations and report any differences found.

- Policies

- Baselines

- Tools

With the PCI DSS standard mandating a lot of individual controls to be in place, automation is key. Instead of changing each system by hand, it makes sense to invest in automation tools and enforce the policies. Such configuration management tools for Linux include Ansible, CFEngine, Chef, Puppet, and Salt.

Automated auditing

When the right automation tools are in place for configuration management, it is time to do the same for auditing. Discrepancies between a policy (or baseline) and technical configurations should be detected as soon as possible. This usually makes it easier to correct the difference and bring it in line with the preferred state. Where configuration management tools do the correction, we need a more in-depth and specialized piece of software to do the detection. Here auditing tools like Lynis come into play. Or you could use your own internal tooling and audit scripts to perform these checks.

Requirement 1

Networking

Systems are linked together via internal or external networks, like the internet. PCI DSS recognizes the fact that network connectivity should be properly protected, especially from untrusted network segments. While most of PCI DSS requirement 1 applies to network components, some of it may also apply to Linux systems.

Firewall configurations

When a firewall is present on the Linux system itself, it is usually iptables. Newer installations might have nftables instead.

lsmod | grep table

A match suggests that iptables (or nftables) is loaded as a kernel module. If so, the configuration should be checked. In the case of iptables there can be a running configuration and one stored on disk. Preferably these are identical, with the exception of dynamically learned entries. A good example is a dynamic blacklist that gets filled by an external tool (like fail2ban).
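The comparison between the running and the stored rule set can be sketched as follows. This is a minimal example, not a complete audit: the function names are hypothetical, and the location of the stored rules is an assumption (for example /etc/iptables/rules.v4 with Debian's iptables-persistent package, or /etc/sysconfig/iptables on RHEL/CentOS). Dynamically filled chains, such as those from fail2ban, will still show up as differences and need manual review.

```shell
#!/bin/sh
# Normalize iptables-save style output so two rule sets can be compared:
# drop comment lines (they carry timestamps) and reset the
# packet/byte counters such as [123:4567] to [0:0].
normalize_rules() {
    grep -v '^#' "$1" | sed 's/\[[0-9]*:[0-9]*\]/[0:0]/'
}

# Compare two rule dumps. Returns 0 when they match, 1 otherwise.
compare_rules() {
    a=$(normalize_rules "$1")
    b=$(normalize_rules "$2")
    [ "$a" = "$b" ]
}

# Usage (as root, paths are assumptions):
#   iptables-save > /tmp/running.rules
#   compare_rules /tmp/running.rules /etc/iptables/rules.v4 || echo "running and stored rules differ"
```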

Insecure services

PCI section 1.1.6b states that insecure services should be identified. Where possible, these services and the related protocols should be disabled. In the past it was common to see protocols share authentication credentials and other sensitive data without any form of encryption. This does not make the protocol itself insecure, yet it makes data susceptible to capture by unauthorized parties. The result is an insecure service, which needs careful consideration when being used or implemented.

Some services can limit access, for example with access control lists or IP filtering. This might make the service acceptable in some environments. Where possible, insecure services should be replaced with a more secure alternative. For example, all Linux distributions now use SSH by default for remote administration. A protocol like telnet should therefore no longer be used. The “r” services like rexec, rlogin, rcp, and rsh are insecure as well.

Examples of commonly seen unencrypted protocols, plus their secure alternatives:

- FTP (FTPS, SFTP, SCP)

- HTTP (HTTPS)

- IMAP (IMAPS)

- POP3 (POP3S)

- SNMP v1/v2 (SNMP v3)

- Telnet (SSH, Mosh)

To determine what protocols are enabled on a system, the netstat or ss utility can be used.

netstat -nlp

Systems which have no netstat utility available can use ss instead. For example, to display listening TCP sockets:

ss -lnt

To see the UDP sockets, use the -u flag.

ss -lnu

After gathering the list of listening services, careful consideration should be given to what services might be unneeded for the system. Disabling services is the quickest and best way to reduce the attack surface of the system.

Some services might be listening on all interfaces (0.0.0.0 for IPv4 or :: for IPv6), while they are only needed locally. In that case, the related listen statement should be adjusted to 127.0.0.1 or ::1, depending on whether you are using IPv4 or IPv6.
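To spot such wildcard listeners quickly, the ss output can be filtered. The sketch below uses a hypothetical helper name and assumes the usual column layout of ss -lnt, where the fourth column holds the local address and port. Older and newer versions of ss print the wildcard address differently, so several spellings are matched.

```shell
#!/bin/sh
# Read `ss -lnt` output on stdin and print the local address:port of
# every TCP listener that is bound to all interfaces.
wildcard_listeners() {
    awk '$1 == "LISTEN" {
        addr = $4
        sub(/:[0-9]+$/, "", addr)   # strip the trailing :port
        if (addr == "0.0.0.0" || addr == "*" || addr == "[::]" || addr == "::")
            print $4
    }'
}

# Usage:
#   ss -lnt | wildcard_listeners
```

Each reported entry is a candidate for review: either the service is meant to be reachable from the network, or its listen statement can be restricted to the loopback address.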

Requirement 2

Vendor-supplied defaults and security parameters

One of the most common attack vectors is trying vendor-supplied default passwords and settings, from the typical “admin:admin” combination to a default SNMP community string. Whenever there is a password or secret value involved, it should not be the one provided by the supplier or maintainer of the software. Instead, you should change these values to something in line with your own security policies.

Insecure protocols

In the last couple of years, several protocols have been proven too weak for proper protection of sensitive data. One result was the POODLE attack, which effectively made SSLv3 a protocol that should be banned. Because of its usage in web services, PCI section 2.2.3 specifically states that SSLv3 and early TLS versions should no longer be used.

Apache

Disable SSLv2 and SSLv3 on Apache installations by adding the SSLProtocol directive, specifying which protocols NOT to use. To also exclude early TLS, -TLSv1 and -TLSv1.1 can be appended in the same way.

SSLProtocol all -SSLv2 -SSLv3

The related configuration file depends on the Linux distribution.

- CentOS: /etc/httpd/conf/httpd.conf

- Debian: /etc/apache2/apache2.conf (older releases: /etc/apache2/httpd.conf)

Dovecot

ssl_protocols = !SSLv2 !SSLv3

nginx

Only enable the newest TLS versions by using the ssl_protocols directive. Add it to the configuration file (e.g. /etc/nginx/nginx.conf), and apply it to the http context. See the nginx SSL module documentation for more details.

ssl_protocols TLSv1.2 TLSv1.3;

Postfix

For the configuration of Postfix, the protocols should be blocked explicitly by prefixing them with an exclamation mark.

smtpd_tls_mandatory_protocols=!SSLv2,!SSLv3

smtp_tls_mandatory_protocols=!SSLv2,!SSLv3

smtpd_tls_protocols=!SSLv2,!SSLv3

smtp_tls_protocols=!SSLv2,!SSLv3

When using Postfix within your environment, have a look at the Postfix hardening guide.

Other common programs which might need attention are:

- Mail daemons

- Download utilities like cURL and wget

If you are the auditor:

The first step is collecting the list of installed packages and running processes. Then search for known packages and processes that use SSL/TLS. If you really want to cover every part of the system, consider analyzing the available binaries on the system. Those binaries using SSL/TLS libraries might need specific configuration to disable older protocols. Please note that not all utilities support such configuration; some simply use the settings chosen at compile time.

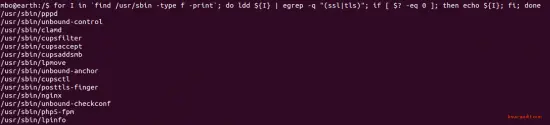

for I in $(find /usr/sbin -type f -print); do ldd ${I} | egrep -q "(ssl|tls)"; if [ $? -eq 0 ]; then echo ${I}; fi; done

Example output:

Requirement 5

Vulnerability management

Systems should be protected against malicious software components, known as malware. While the chance of getting infected with adware on a Linux system is very small, it is possible that a system will end up with a backdoor or rootkit. While prevention is always the preferred option, we have to ensure that adequate detection mechanisms are in place.

Malware scanning for Linux

PCI DSS section 5.1 describes the need for an anti-virus solution. This subject is definitely controversial for Linux administrators, as viruses on Linux-based systems are rare. Still, the platform is not fully resistant to different forms of malware and the related threats. So depending on the particular goal of a system, one or multiple tools can be a good fit.

Open source tools

- Generic: ClamAV

- E-mail: ClamAV

- PHP: LMD

- Rootkit detection: chkrootkit or rkhunter

Note: Many commercial malware scanners are available nowadays. Each scanner has its strengths and weaknesses in detecting malware threats on Linux.

ClamAV

One of the most commonly used malware scanners on Linux is ClamAV. Like any other anti-virus/malware solution, it should be kept up-to-date. This can be achieved by running the freshclam utility. To determine the configuration settings of ClamAV and its individual components, use the clamconf command. It also shows statistics and helps to determine if the malware definitions are up-to-date.

Overview of the most common ClamAV utilities:

- clamscan / clamdscan - client for scanning files and directories

- clamd - daemon process for on-demand scanning

- clamconf - show configuration of ClamAV components

- freshclam - update ClamAV malware definitions

Linux Malware Detect (LMD)

A great addition to ClamAV is LMD, or Linux Malware Detect. It is released under GPLv2 and can actually leverage the scanning power of ClamAV. It can use the inotify functionality of Linux to scan new and modified files. This malware scanner focuses on the types of malware commonly seen on Linux, including PHP backdoors and rootkits. LMD is especially useful for systems running web services, like those of shared hosting providers.

Commercial malware scanners

Here are some examples of commercial vendors that have a scanner which might work on Linux or macOS:

- Avast

- Bitdefender

- Cylance

- McAfee

- Sophos

- Trend Micro

Malware checklist for PCI DSS and Linux

- Regular scans via cronjob

- Proper logging (preferably to syslog)

- Up-to-date virus definitions
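The first two checklist items can be combined in a small wrapper script that is started from cron. The following is a minimal sketch under a few assumptions: the function name, the script path and the scanned directory in the cron line are hypothetical, and the syslog facility and priorities are only one possible choice. It relies on the documented clamscan exit codes (0 for no virus found, 1 for virus found, 2 for errors).

```shell
#!/bin/sh
# Run a recursive ClamAV scan of one directory and write a single
# result line to syslog via logger.
scan_and_log() {
    target="$1"
    # --infected prints only infected files, --no-summary skips the statistics
    clamscan --recursive --infected --no-summary "$target"
    rc=$?
    case "$rc" in
        0) logger -t clamscan -p daemon.info "scan of $target: clean" ;;
        1) logger -t clamscan -p daemon.warning "scan of $target: malware found" ;;
        *) logger -t clamscan -p daemon.err "scan of $target: scanner error (exit $rc)" ;;
    esac
    return "$rc"
}

# Example cron entry (hypothetical path and target), e.g. in /etc/cron.d/clamav-scan:
#   30 2 * * * root /usr/local/sbin/clamav-scan.sh /srv/www
# where the script ends with:  scan_and_log "$1"
```

Keeping the definitions current remains the job of freshclam, which is usually run as its own daemon or cron job.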


Requirement 6

Develop and maintain secure systems and applications

The requirements in this section are mostly non-technical, like determining which procedures are in use, and confirmation of their effectiveness.

Requirement 7

Restrict access to cardholder data by business need to know

These are mostly non-technical requirements.

Requirement 8

Authentication

Systems need to be maintained, which requires legitimate users to have access to them. To ensure this is possible in a secure way, Linux systems have different ways to store account details and authenticate these users. The most obvious ways to access a system are local and remote (e.g. with SSH). With the help of proper account management and authentication controls, access is granted to those who need it, while blocking others without this need.

Within the PCI DSS requirements, there are several controls which highlight the need for proper access, protection, and logging of changes. This applies to system configuration files (e.g. those of PAM or SSH), but also to the logging itself. In other words, we should take appropriate measures to safeguard these configurations themselves as well.

Inactive accounts

Unused or inactive accounts on the system might be an unneeded security risk. Such accounts usually exist because there was a one-time need to log in, or they were simply forgotten after an employee left the company. PCI describes in section 8.1.4 that accounts which have been inactive for 90 days should be removed or disabled.

To determine the last time a user logged in, the last command can be used. Information is stored in /var/log/wtmp or rotated files like /var/log/wtmp.1.

Note: It is common to find the wtmp files being rotated. Requirement 10.7 specifies that information should be available (online) for at least 3 months. Ensure that enough copies are being stored on the system itself, or available on a central logging server.
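Besides last, the lastlog utility from the shadow suite offers a quick overview: its -b option only shows records older than the given number of days. The helper below is a hypothetical filter that assumes the classic lastlog output layout (one header line, the user name in the first column, and **Never logged in** for accounts without a recorded login). Some newer distribution releases ship lastlog2 instead, in which case the output format may differ.

```shell
#!/bin/sh
# Read `lastlog -b DAYS` output on stdin and print the names of accounts
# that did log in at some point, but not within the last DAYS days.
stale_logins() {
    awk 'NR > 1 && !/\*\*Never logged in\*\*/ { print $1 }'
}

# Usage:
#   lastlog -b 90 | stale_logins
```

Accounts that never logged in are skipped here to keep system accounts out of the list. They still deserve a separate review, as a personal account that was never used is a finding as well.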

Pluggable Authentication Modules (PAM)

Users need a way to present themselves to a Linux system. This process is called authentication and is done using Pluggable Authentication Modules (PAM). The PCI DSS standard does not describe how PAM should be configured, but it gives several pointers regarding:

- Password history (8.2.5a password reuse)

- Password strength (8.2.3a password minimum length and character types)

- Password lockout and release (8.1.7 lockout duration)

The PAM configuration differs between Linux distributions, so it is important to make the right changes, and to the right files. Of similar importance is to perform an in-depth test, to ensure the system works as expected.

Password file integrity

Linux uses the common password file named /etc/passwd, together with a “shadow copy” in /etc/shadow. To ensure the integrity of the system, we should consider the file integrity of these files first. PCI describes that the storage and handling of these files should be done in a secure way. What does this mean? We could start with the file permissions of these files.

File permissions of password files

Ubuntu

- /etc/passwd (644, owner root, group root)

- /etc/shadow (640, owner root, group shadow)

The “other” class (everyone who is neither the owner nor a member of the group) should never have read access to the shadow file, as it contains the hashed passwords.
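Checking these values by hand works, but a small helper makes the check repeatable. This sketch uses a hypothetical function name and relies on stat from GNU coreutils (the -c format option). The expected values in the usage lines are the Ubuntu values listed above; other distributions may use a different mode or group for /etc/shadow.

```shell
#!/bin/sh
# Compare mode, owner and group of a file with the expected values.
# Returns 0 on a match, 1 on a mismatch, 2 if the file cannot be read.
check_perms() {
    file="$1"; want_mode="$2"; want_owner="$3"; want_group="$4"
    actual=$(stat -c '%a %U %G' "$file") || return 2
    if [ "$actual" = "$want_mode $want_owner $want_group" ]; then
        echo "OK: $file ($actual)"
    else
        echo "MISMATCH: $file is $actual, expected $want_mode $want_owner $want_group"
        return 1
    fi
}

# Usage:
#   check_perms /etc/passwd 644 root root
#   check_perms /etc/shadow 640 root shadow
```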

Safe storage of passwords

Within the requirements of PCI DSS section 8.2.1, it is stated that passwords should be properly safeguarded. Passwords should be unreadable (8.2.1b) and protected with the right cryptographic algorithms (8.2.1a).

Related check: view the contents of /etc/shadow and verify that no passwords are stored in cleartext. Also, analyze the second field and see if it starts with $5$ (SHA-256) or $6$ (SHA-512).
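This check can be scripted with awk. The sketch below (hypothetical function name) reports accounts with an empty password field or with a value that does not start with $5$ or $6$, and skips entries that start with ! or *, which mark locked accounts or accounts without password login. Recent Debian and Ubuntu releases default to yescrypt ($y$), which this sketch would flag; whether to accept that scheme is a decision for your own policy and auditor.

```shell
#!/bin/sh
# Report accounts in a shadow-format file whose password field is empty
# or does not use the SHA-256 ($5$) or SHA-512 ($6$) scheme.
weak_hash_accounts() {
    awk -F: '
        $2 == ""     { print $1 ": empty password"; next }
        $2 ~ /^[!*]/ { next }   # locked account or no password login
        $2 !~ /^\$(5|6)\$/ { print $1 ": unexpected hash format" }
    ' "$1"
}

# Usage (as root):
#   weak_hash_accounts /etc/shadow
```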

Unique IDs

Then there is the uniqueness of the IDs listed in the files, and the correlation between them: IDs in one file should match the IDs in the other.

Integrity tests for password files

A very quick and powerful method to test both /etc/passwd and /etc/shadow is by using the pwck utility. It covers a wide variety of tests:

- the correct number of fields

- a unique and valid username

- a valid user and group identifier

- a valid primary group

- a valid home directory

- a valid login shell

- every passwd entry has a matching shadow entry

- every shadow entry has a matching passwd entry

- passwords are specified in the shadowed file

- shadow entries have the correct number of fields

- shadow entries are unique in shadow

- the last password changes are not in the future

Command to test:

pwck -r

This command will show the results of each of the mentioned tests. Not all output from this command is directly a finding. For example, missing home directories are shown as well.

Password history

To prevent users from reusing the same passwords over and over, a password history should be applied according to section 8.2.5 of the PCI standard. It should at least store the last 4 passwords, to ensure these aren’t reused by the account owner.

Previous passwords can be stored with the help of the modules pam_unix and pam_pwhistory. Check your PAM configuration and see if it contains these modules, including the remember parameter.

For environments which apply LDAP, NIS or Kerberos, this configuration might not be applied on the end systems themselves, but on the central authentication server.
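For systems that do handle password changes locally, the remember parameter can be checked with a short script. This is a sketch with hypothetical function names. It only looks at a single file and at the first matching password line, so included files and more complex stacks still need a manual look. The file names in the usage lines are the common locations on Debian/Ubuntu and RHEL/CentOS.

```shell
#!/bin/sh
# Print the remember= value of the first pam_unix or pam_pwhistory
# line in the password stack of the given PAM file (if any).
pam_remember_value() {
    grep -E '^[[:space:]]*password[[:space:]].*pam_(unix|pwhistory)\.so' "$1" |
        sed -n 's/.*remember=\([0-9][0-9]*\).*/\1/p' |
        head -n 1
}

# Succeeds when at least the last 4 passwords are remembered.
history_ok() {
    value=$(pam_remember_value "$1")
    [ -n "$value" ] && [ "$value" -ge 4 ]
}

# Usage:
#   history_ok /etc/pam.d/common-password || echo "password history too short or not set"   # Debian/Ubuntu
#   history_ok /etc/pam.d/system-auth     || echo "password history too short or not set"   # RHEL/CentOS
```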

Password strength

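Passwords are still an important piece in the authentication process. Define a minimum password length and strength. Section 8.2.3 asks for at least seven characters, containing both numeric and alphabetic characters.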

Two-factor authentication

Central access points to the network, like jump servers or stepping stones, should be additionally guarded. One requirement is two-factor authentication (8.3) for entry points outside the network.

Two-factor authentication is usually arranged via PAM. Depending on the solution, the related module needs to be installed, configured and tested. Because PAM uses “authentication stacks”, ensure that modules are properly evaluated in the right order, with the appropriate control flag, like required or sufficient.

Related modules:

- pam_google_authenticator.so

- pam_yubikey.so

Password changes

PCI section 8.2.4 states that passwords and passphrases should be changed at least every 90 days. Changing passwords on a regular basis reduces the chance of successful brute-force cracking of passwords. It also helps with identifying inactive accounts (section 8.1.4, Remove/disable inactive user accounts within 90 days).

One way to test this is by checking the shadow file.

X=$(( $(date --utc +%s) / 86400 - 90 )); awk -v BEFORE="$X" -F: '$2 ~ /^\$/ && $3 < BEFORE { print $1 }' /etc/shadow

How it works:

- Gather the current date and convert it to days since 1 January 1970

- Subtract 90 days from this number

- Open the /etc/shadow file with awk

- Filter entries which have a hashed password (the field starts with $, for example $5$ or $6$) and whose last password change is older than 90 days

Logging changes of password files

Changes to password files should be logged. The Linux audit framework is a great solution for this. Since there is a lot to audit, refer to the Audit Trails section below.

Shell

The shell is definitely one of the most used components for Linux administrators. As a matter of personal preference, there are multiple shells available for Linux systems. The first step is to ensure that all shells are accounted for. Determine which ones are installed, and via /etc/shells which ones are allowed.

Session Timeout in Linux shells

Depending on the shells available on the system, a timeout should be configured with the appropriate value. PCI section 8.1.8 (session idle timeout) mandates that after 15 minutes, or 900 seconds, an idle session is terminated.

One of the files to arrange this is /etc/profile. By using typeset together with the TMOUT variable, we can set this idle session time, after which the user is automatically logged out.

typeset -r TMOUT=900
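To verify the setting during an audit, the value can be extracted from the profile files. The helper below is a hypothetical sketch: it ignores commented lines, takes the last TMOUT assignment in the given file, and accepts values between 1 and 900 seconds (a value of 0 disables the timeout in bash). It does not follow files sourced from the profile, so /etc/profile.d/*.sh should be checked as well.

```shell
#!/bin/sh
# Succeeds when the given file sets TMOUT to a value of at most 900 seconds.
tmout_ok() {
    value=$(grep -v '^[[:space:]]*#' "$1" |
        grep -Eo 'TMOUT=[0-9]+' |
        tail -n 1 |
        cut -d= -f2)
    [ -n "$value" ] && [ "$value" -gt 0 ] && [ "$value" -le 900 ]
}

# Usage:
#   tmout_ok /etc/profile || echo "no suitable TMOUT found in /etc/profile"
```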

Tips for Requirement 8 on Linux

Validation can usually be done quickly by reviewing the PAM, SSH, and authentication configuration. Due to the complexity around PAM, ensure that the configuration is correct and tested.

Automation tip: Lynis can take care of most validation steps in requirement 8 of PCI DSS.

Paths and files:

- /etc/pam.d

- /etc/ssh

Requirement 10

Audit Trails

PCI DSS requirement 10 covers the need for audit trails, with section 10.7 covering their retention. On Linux we have three common options:

- Normal logging

- Audit events

- Accounting details

Normal logging

The most common type of normal logging on Linux is by using syslog. It is still a popular way to store information, varying from boot information, up to kernel events and software related information.

For Linux logging, it is important to check the “health” of the logging configuration itself. This determines what happens with events, like which events to capture and what to ignore. You don’t want to log too much. This will fill up disks and only make troubleshooting and investigation harder. On the other hand, you don’t want to miss out on important events either.

The knowledge of the system administrator comes in handy here. He or she usually knows best which files contain sensitive information or need additional protection.

Important areas:

- syslog rotation

- systemd

Log rotation

Depending on the number of transactions and the level of detail stored, the amount of disk space occupied by log files can be huge. Proper log rotation should be in place, without destroying previously stored data (e.g. by removal or overwriting).

Systemd logging

Newer Linux systems will be using systemd as their service manager. These systems will also be using journald, a journal logging utility. It is not yet a full replacement for syslog, as some information is stored in both, while other information is only available in one of the two. When setting up (and auditing) a system with systemd, be sure to check the configuration of both syslog and journald.

Linux Audit System

Besides logging, the system can collect audit events. Systems running Linux usually have support for the audit framework enabled. As this is a very extensive topic, we suggest following up on these individual articles:

- Linux Audit Framework 101 - Basic Rules for Configuration

- Tuning auditd: High Performance Linux Auditing

The Linux audit framework can be used to monitor many parts of the PCI DSS requirements, like changes to files, or access to confidential data. Since it is easy to go overboard with all the things you can watch, it is highly suggested to also optimize the audit rules. As always, test them carefully, to ensure all events are properly recorded.
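As one small example, the password files discussed under Requirement 8 could be watched for writes and attribute changes. The sketch below only generates the rule lines in the file watch syntax of auditctl (-w path, -p permissions, -k key). The function name, the key name and the rules file name are assumptions, and the list of files should follow your own policy.

```shell
#!/bin/sh
# Print one audit watch rule per path: log writes (w) and attribute
# changes (a), tagged with the given key for later searching.
gen_watch_rules() {
    key="$1"
    shift
    for path in "$@"; do
        printf '%s\n' "-w $path -p wa -k $key"
    done
}

# Usage (as root, file name is an assumption):
#   gen_watch_rules identity /etc/passwd /etc/shadow /etc/group > /etc/audit/rules.d/identity.rules
#   augenrules --load
#   ausearch -k identity
```

Using a key per topic keeps the rule set readable and makes it easier to find the related events with ausearch.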

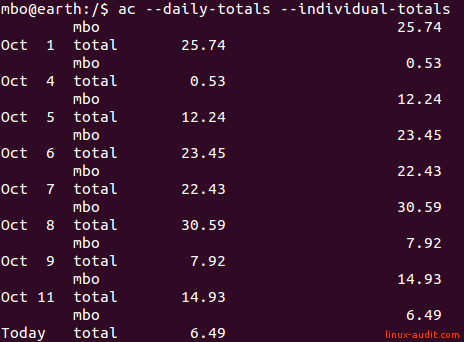

Accounting

Another category of data to store is accounting details. This might be used for billing, troubleshooting or for further processing later. Accounting details are usually stored for actions performed by users, like running a particular process, or the connect time to a system. You should ensure that accounting details are equally treated as logging and audit information. It can provide a valuable resource during investigations like troubleshooting or incident response on Linux.

Output of ac command showing connect times

Notes

If you liked this guide, please share it with your peers. This way the requirements of the PCI DSS standard can be shared with more Linux system administrators and IT auditors.

The PCI DSS standard, logo and some of the linked resources are copyrighted by the PCI Security Standards Council, LLC. This guide is a work based on the related standard and serves as a guideline. Before implementing security controls on systems within your PCI scope, always consult your own auditors first, to determine if the related controls are in line with the requirements.